ICLR2020: Compression based bound for non-compressed network: unified generalization error analysis of large compressible deep neural network

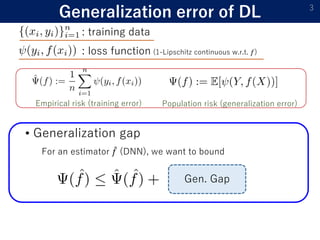

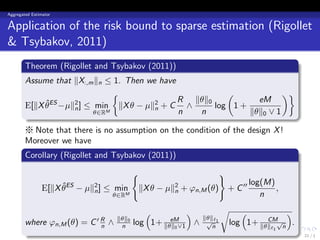

1) The document presents a new compression-based bound for analyzing the generalization error of large deep neural networks, even when the networks are not explicitly compressed.

2) It shows that if a trained network's weight matrices and the covariance matrices of its intermediate-layer outputs are (nearly) low-rank, then the network has a small intrinsic dimensionality and can be efficiently compressed.

3) This yields a generalization bound for the original, non-compressed network that is tighter than those of existing approaches, which helps explain why overparameterized networks generalize well despite having more parameters than training examples.
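The link between low rank and compressibility in points 2) and 3) can be illustrated with a small sketch. It assumes NumPy, measures how compressible a weight matrix is via truncated SVD, and uses the ridge-style degrees of freedom sum_j mu_j / (mu_j + lam) of a covariance spectrum as a stand-in for "intrinsic dimensionality". These choices, the helper names (`effective_dimension`, `rank_for_energy`, `truncated_svd`), the 0.99 energy threshold, lam = 0.1, and the synthetic data are illustrative assumptions, not the paper's exact definitions or procedure.

```python
import numpy as np


def effective_dimension(eigenvalues, lam):
    """Degrees of freedom  sum_j mu_j / (mu_j + lam)  of a PSD spectrum.

    Assumption: this ridge-style effective dimension is only an illustrative
    proxy for "intrinsic dimensionality", not the paper's exact quantity.
    """
    # Clip tiny negative eigenvalues caused by floating-point error.
    mu = np.clip(np.asarray(eigenvalues, dtype=float), 0.0, None)
    return float(np.sum(mu / (mu + lam)))


def rank_for_energy(weight, energy=0.99):
    """Smallest rank whose singular values keep `energy` of ||W||_F^2."""
    s = np.linalg.svd(weight, compute_uv=False)
    cumulative = np.cumsum(s ** 2) / np.sum(s ** 2)
    # First index where cumulative energy reaches the target, turned into a rank.
    return int(min(np.searchsorted(cumulative, energy) + 1, len(s)))


def truncated_svd(weight, rank):
    """Best rank-`rank` approximation of `weight` (Eckart-Young theorem)."""
    u, s, vt = np.linalg.svd(weight, full_matrices=False)
    return (u[:, :rank] * s[:rank]) @ vt[:rank, :]


rng = np.random.default_rng(0)

# Hypothetical "trained" weight matrix: rank-5 signal plus small noise.
m, n, true_rank = 200, 100, 5
W = rng.standard_normal((m, true_rank)) @ rng.standard_normal((true_rank, n))
W += 1e-3 * rng.standard_normal((m, n))

r = rank_for_energy(W, energy=0.99)
W_r = truncated_svd(W, r)
rel_err = np.linalg.norm(W - W_r) / np.linalg.norm(W)  # Frobenius norms
print(f"rank kept: {r}, params {m * n} -> {r * (m + n)}, rel. error {rel_err:.2e}")

# Hypothetical hidden-layer activations: 50 units driven by 3 latent factors.
X = rng.standard_normal((1000, 3)) @ rng.standard_normal((3, 50))
X += 1e-2 * rng.standard_normal((1000, 50))
cov_eigs = np.linalg.eigvalsh(np.cov(X, rowvar=False))
ed = effective_dimension(cov_eigs, lam=0.1)
print(f"effective dimension: {ed:.2f} of {X.shape[1]} units")
```

Because the synthetic weight is rank 5 plus small noise, a handful of singular directions retain almost all of its energy, so storing the factors takes r(m + n) numbers instead of mn; likewise the activation covariance has roughly three effective dimensions out of 50. This only conveys the intuition behind the summary; it does not compute the bound itself.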

ICLR 2020

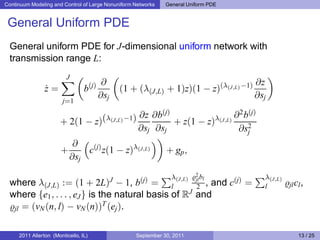

Continuum Modeling and Control of Large Nonuniform Networks

PAC-Bayesian Bound for Gaussian Process Regression and Multiple Kernel Additive Model

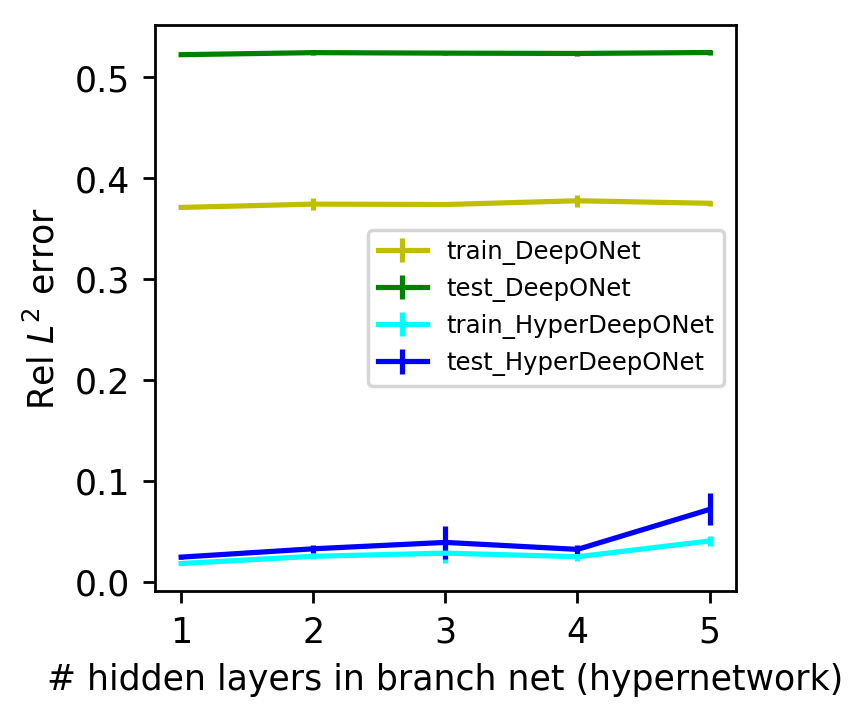

HyperDeepONet: learning operator with complex target function space using the limited resources via hypernetwork

Discrete MRF Inference of Marginal Densities for Non-uniformly Discretized Variable Space

Minimax optimal alternating minimization for kernel nonparametric tensor learning

ICLR 2020 Statistics - Paper Copilot

Publications

[ICLR2021 (spotlight)] Benefit of deep learning with non-convex noisy gradient descent

Higher Order Fused Regularization for Supervised Learning with Grouped Parameters

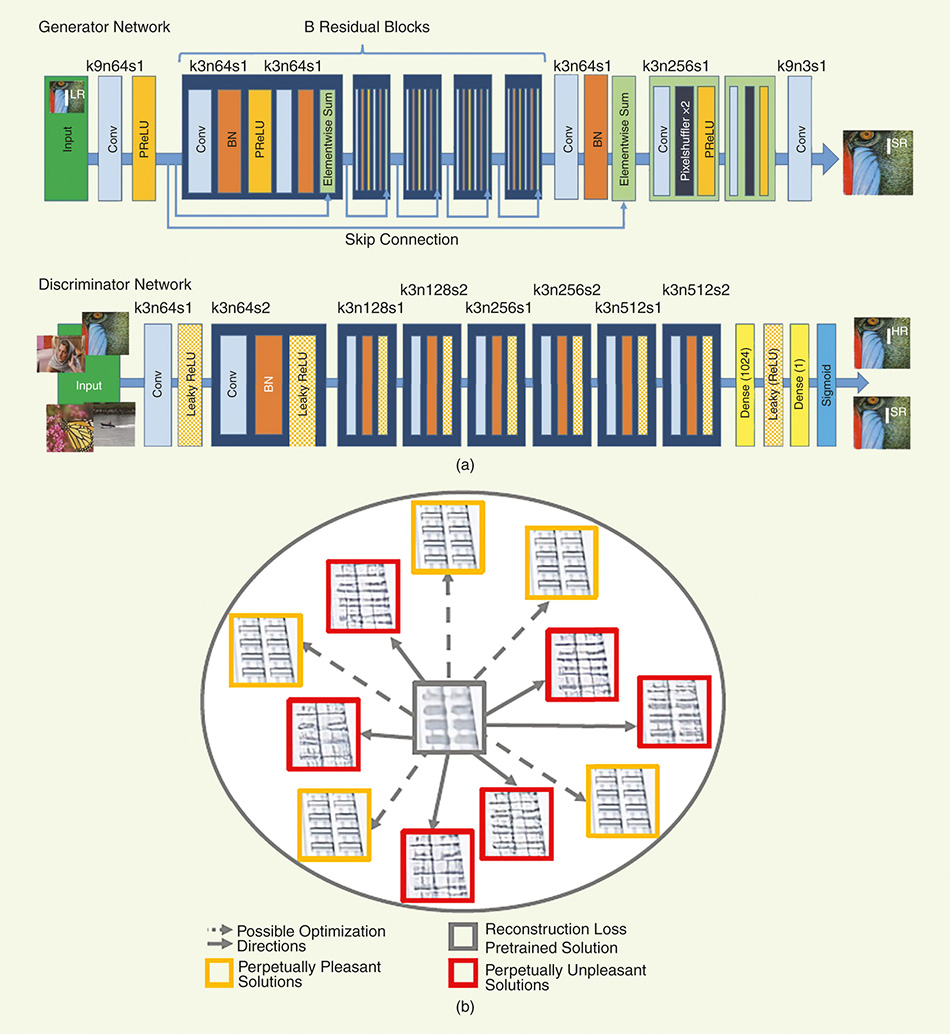

Superresolution Image Reconstruction

NeurIPS 2021: Lossy Compression for Lossless Prediction (paper)