DistributedDataParallel non-floating point dtype parameter with

🐛 Bug Using DistributedDataParallel on a model that has at least one non-floating point dtype parameter with requires_grad=False, with a WORLD_SIZE <= nGPUs/2 on the machine, results in an error "Only Tensors of floating point dtype can re

Aman's AI Journal • Primers • Model Compression

[Source Code Analysis] Model-Parallel Distributed Training with Megatron (2) --- Overall Architecture - 罗西的思考 - 博客园 (cnblogs)

distributed data parallel, gloo backend works, but nccl deadlock · Issue #17745 · pytorch/pytorch · GitHub

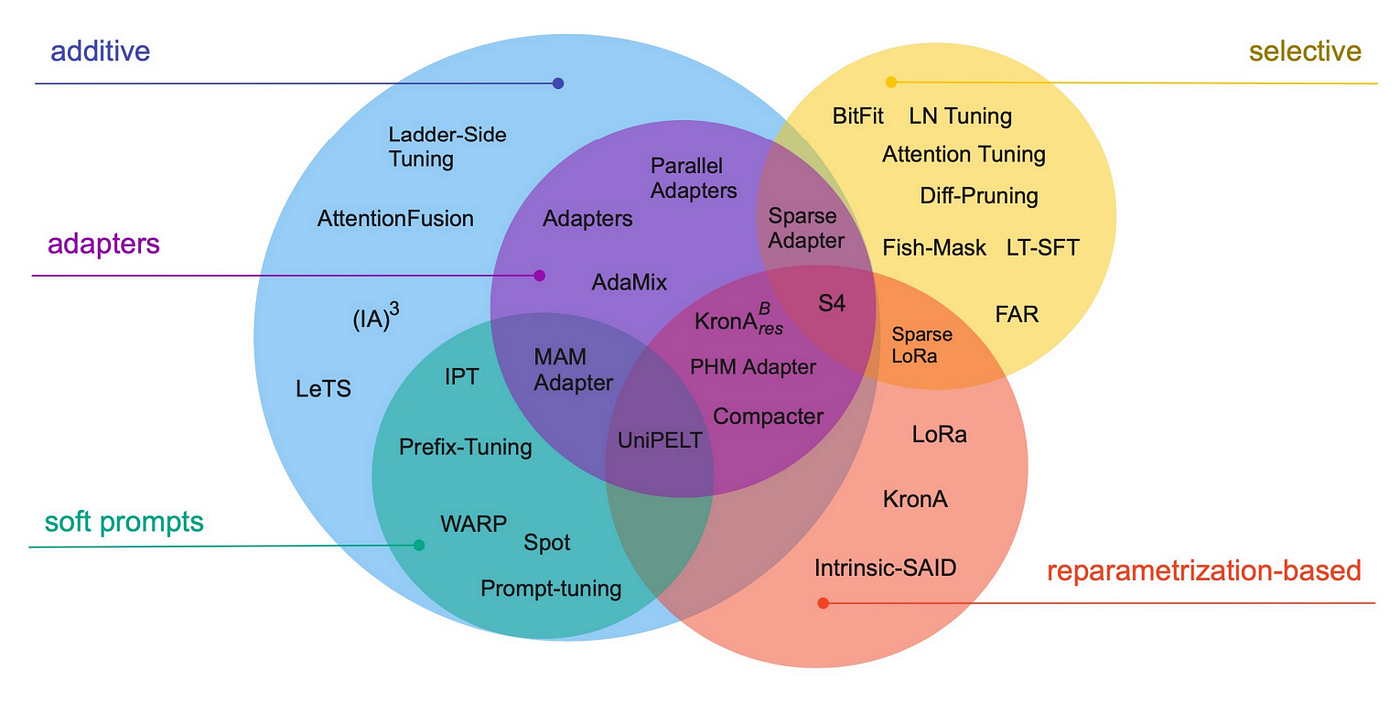

Finetune LLMs on your own consumer hardware using tools from PyTorch and Hugging Face ecosystem

Configure Blocks with Fixed-Point Output - MATLAB & Simulink - MathWorks Nordic

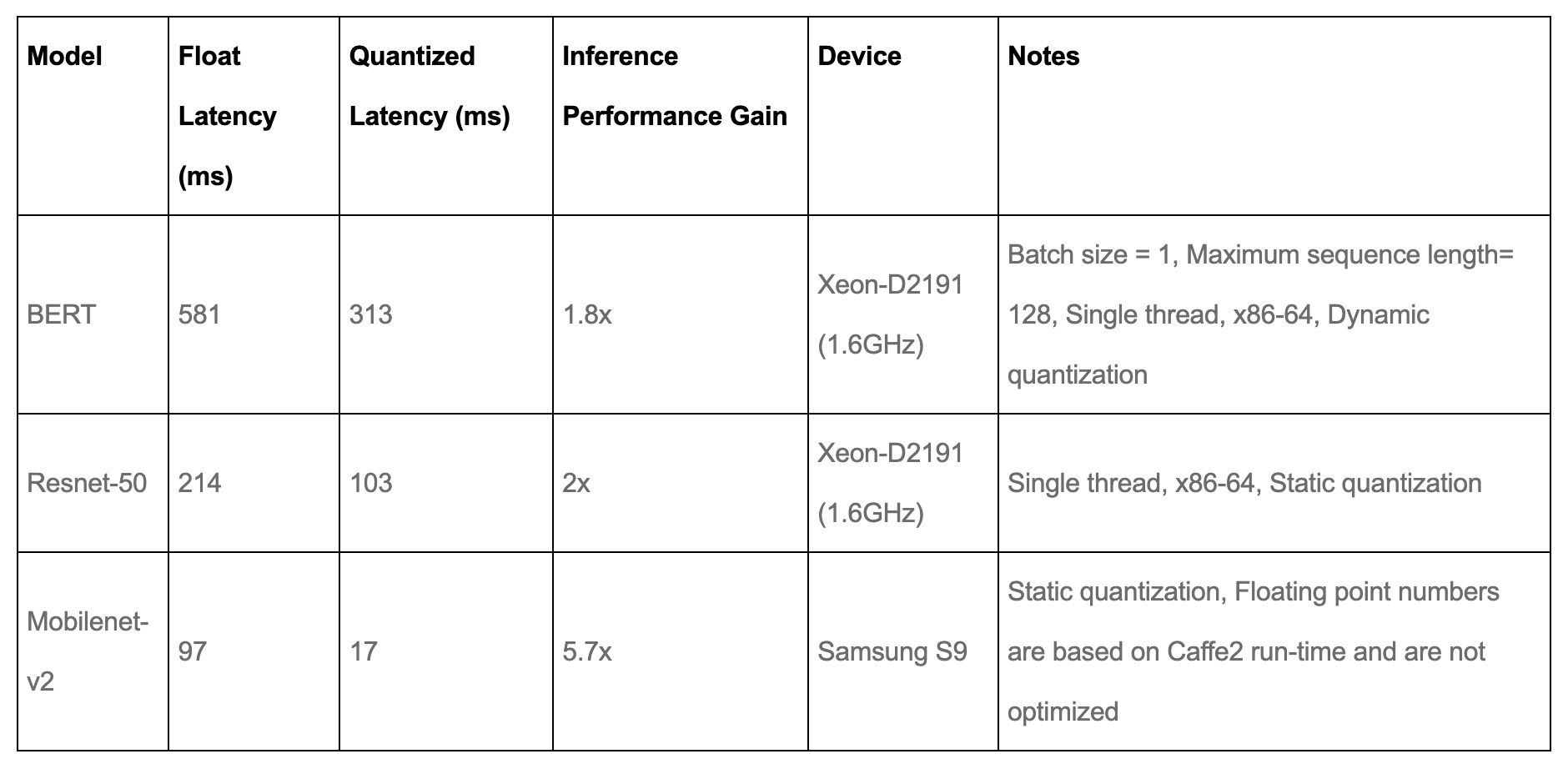

Distributed PyTorch Modelling, Model Optimization, and Deployment

Error with DistributedDataParallel with specific model · Issue #46166 · pytorch/pytorch · GitHub

PyTorch Lightning Manual (readthedocs.io, English, May 2020), PDF, Computing

How to estimate the memory and computational power required for Deep Learning model - Quora