MPT-30B: Raising the bar for open-source foundation models

Introducing MPT-30B, a new, more powerful member of our Foundation Series of open-source models, trained with an 8k context length on NVIDIA H100 Tensor Core GPUs.

MPT-30B: Raising the bar for open-source foundation models : r/LocalLLaMA

MPT-30B: MosaicML Outshines GPT-3 With A New LLM To Push The Boundaries of NLP

Guide Of All Open Sourced Large Language Models (LLMs), by Luv Bansal

Computational Power and AI - AI Now Institute

Survival of the Fittest: Compact Generative AI Models Are the Future for Cost-Effective AI at Scale - Intel Community

The List of 11 Most Popular Open Source LLMs of 2023 | Lakera – Protecting AI teams that disrupt the world.

Guido Appenzeller on LinkedIn: MPT-30B: Raising the bar for open-source foundation models

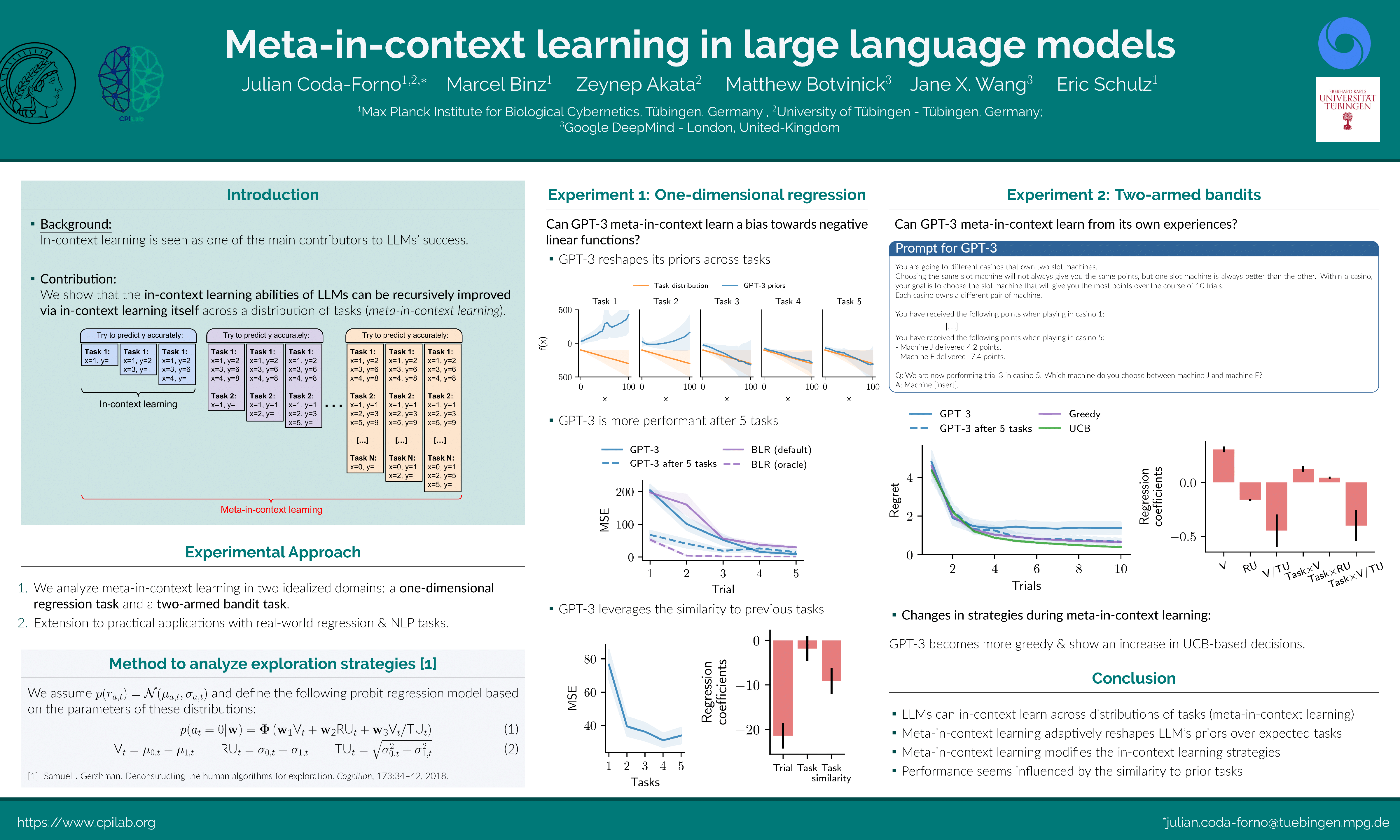

NeurIPS 2023

Announcing MPT-7B-8K: 8K Context Length for Document Understanding