Using SHAP Values to Explain How Your Machine Learning Model Works

Using SHAP with Cross-Validation in Python, by Dan Kirk

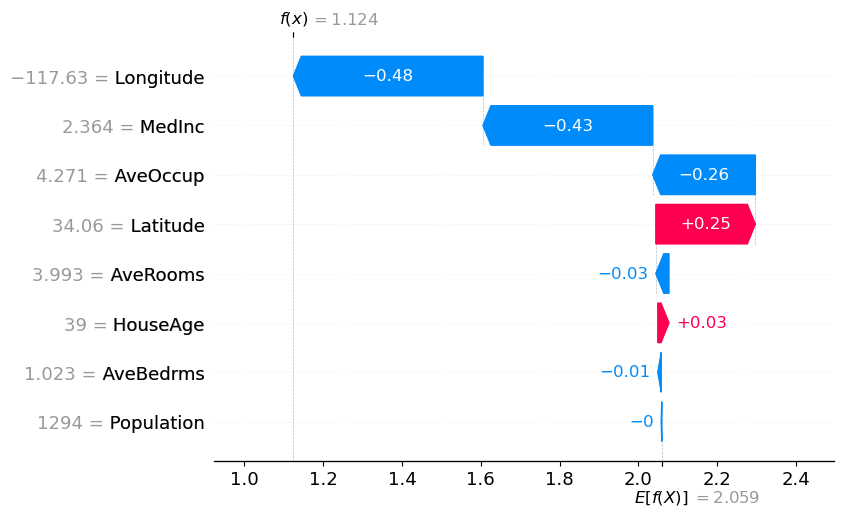

An Introduction to SHAP Values and Machine Learning Interpretability

Sensors, Free Full-Text

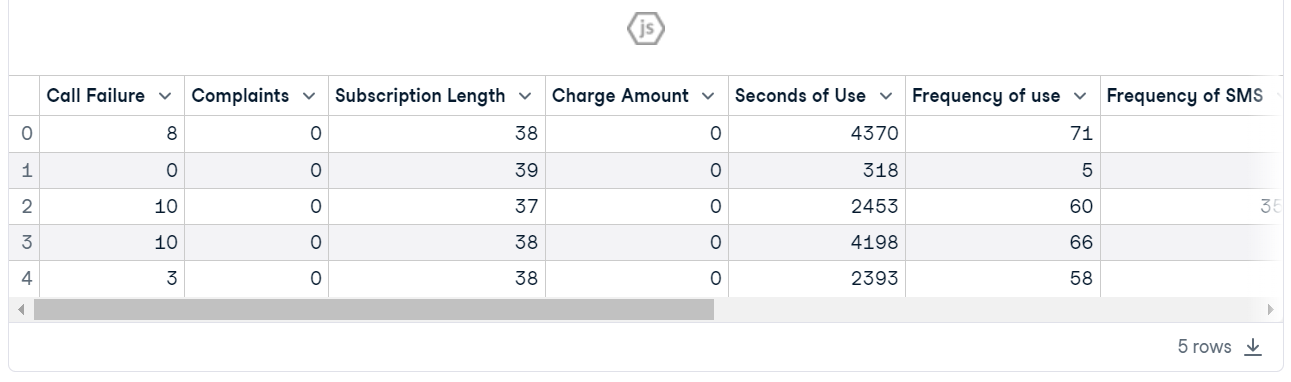

Is your ML model stable? Checking model stability and population drift with PSI and CSI, by Vinícius Trevisan

Introduction to Explainable AI (Explainable Artificial Intelligence or XAI) - 10 Senses

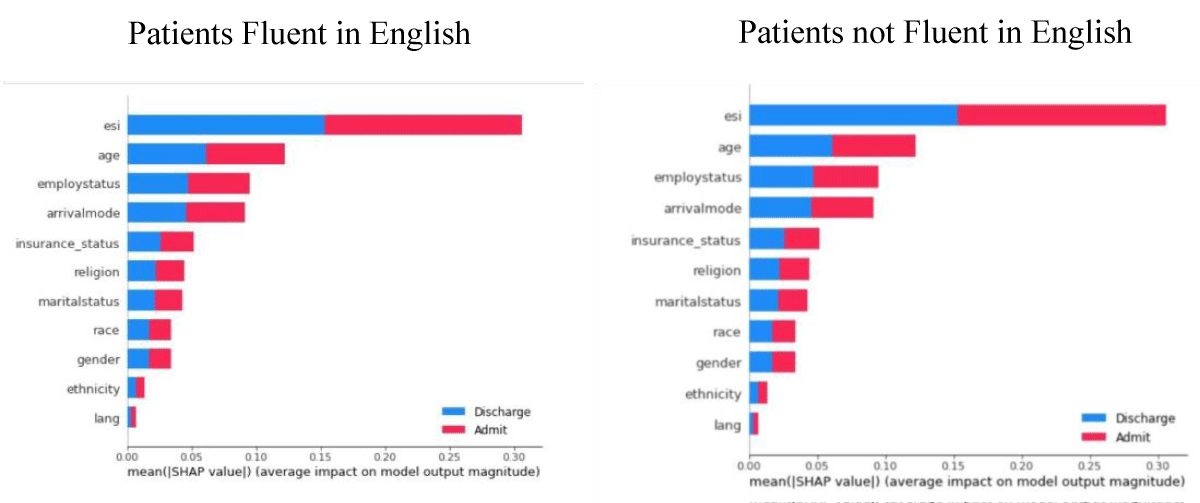

Using Model Classification to detect Bias in Hospital Triaging

List: SHAP, Curated by Dmor Idesign

Explain Your Model with the SHAP Values, by Chris Kuo/Dr. Dataman, Dataman in AI

A Game Theoretic Framework for Interpretable Student Performance Model

Empowering Responsible AI through the SHAP library

GitHub - aarkue/eXdpn: Tool to mine and evaluate explainable data Petri nets using different classification techniques.

Is it correct to feed the test data in to produce the Shapley values? I believe we should use the training data, since we are explaining the model, which was configured
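As we understand the usual SHAP workflow, the question mixes two separate roles. The background (reference) data, commonly drawn from the training set, defines the baseline expected model output. The rows being explained can come from the test set. The sketch below does not use the `shap` library. Instead, it computes exact interventional Shapley values by brute force over every feature coalition, so both roles are visible. The linear model `predict`, the helpers `coalition_value` and `exact_shap`, and the small `X_train`/`X_test` arrays are all hypothetical and chosen only for illustration.

```python
from itertools import combinations
from math import factorial


def predict(row):
    # Hypothetical "trained" model: a fixed linear function used only for illustration.
    return 2.0 * row[0] - 1.0 * row[1] + 0.5 * row[2]


def coalition_value(x, subset, background, model):
    """Average model output when features in `subset` are taken from x
    and all remaining features are taken from each background row."""
    total = 0.0
    for b in background:
        z = [x[j] if j in subset else b[j] for j in range(len(x))]
        total += model(z)
    return total / len(background)


def exact_shap(x, background, model):
    """Exact Shapley values for one row x, enumerating every coalition."""
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            for combo in combinations(others, size):
                s = set(combo)
                # Shapley weight: |S|! (n - |S| - 1)! / n!
                weight = factorial(len(s)) * factorial(n - len(s) - 1) / factorial(n)
                # Marginal contribution of feature i when added to coalition S
                gain = (coalition_value(x, s | {i}, background, model)
                        - coalition_value(x, s, background, model))
                phi[i] += weight * gain
    return phi


# Hypothetical data: training rows act as the background distribution,
# and the test row is the instance whose prediction is being explained.
X_train = [[1.0, 2.0, 0.0], [3.0, 0.0, 2.0], [2.0, 1.0, 1.0]]
X_test = [[4.0, 1.0, 3.0]]

base_value = sum(predict(b) for b in X_train) / len(X_train)
phi = exact_shap(X_test[0], X_train, predict)
print("base value:", base_value)
print("SHAP values:", phi)
print("prediction:", predict(X_test[0]))
```

For a linear model with this interventional value function, each value reduces to the feature's weight times (x_i minus the training mean of feature i). Here that gives roughly [4.0, 0.0, 1.0], and adding these to the base value 3.5 recovers the test prediction 8.5. Brute-force enumeration grows exponentially with the number of features, so this is only practical for a handful of them.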